Introduction

This week’s academic signal cluster is unusually coherent.

Across safety papers, mechanistic interpretability work, neuro-symbolic reasoning, and fresh AI governance briefings, one story keeps repeating:

We’re no longer aligning a single model; we’re governing an internet of agents whose internal reasoning is finally becoming partially inspectable.

The sources we track here do three things:

- Decompose agentic ecosystems into architectures and risk patterns. arXiv

- Open “mechanistic windows” into multi-agent LLM systems so we can see how values and coordination actually play out internally. arXiv

- Connect all of this to governance practice and public expectation, reminding us that institutions and citizens are no longer spectators in the AI story. Ada Lovelace Institute

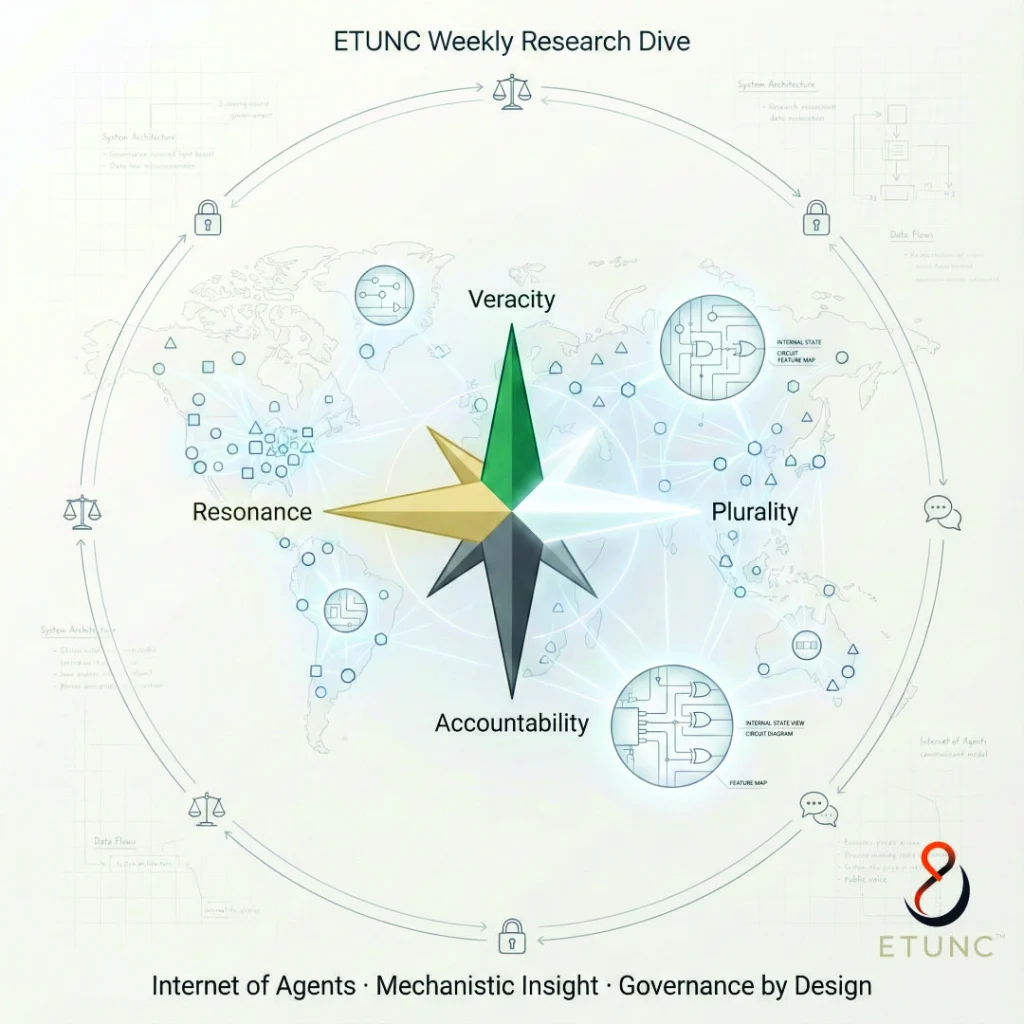

For ETUNC, this is a perfect VPAR week: Veracity through interpretability, Plurality through multi-agent views and public attitudes, Accountability through emergent governance playbooks.

3. Core Discoveries (Paper-by-Paper, VPA view)

3.1 Toward a Safe Internet of Agents arXiv

- What it is

This paper frames a global “Internet of Agents”: LLM-based and software agents communicating, delegating, and acting across networks. It decomposes agentic AI into an architectural spectrum, from simple tool-wrapped models to fully autonomous, networked ecosystems. Risk is treated as systemic rather than local: coordination failures, cascading errors, and emergent behaviors arise from interactions, not just from individual agents. The authors propose an architectural safety analysis with threat models, guardrails, and monitoring strategies at each layer of the ecosystem.

- Core insight

Safety is now a systems-architecture problem, not just a single-model problem.

- VPA assessment

- Veracity: High—grounded in structured threat and architecture analysis.

- Plurality: Moderate—acknowledges multiple deployment topologies, less on socio-political diversity.

- Accountability: High—explicit focus on where responsibility should sit across the “internet of agents.”

- Why it matters for ETUNC

This strongly reinforces “From Agents to Accountability” and the ETUNC Guardian/Envoy distinction.
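The paper's claim that risk is systemic rather than local can be made concrete with a small graph computation. The sketch below is our own illustration, not the authors' method: it assumes a hypothetical delegation graph (`FEEDS`, with made-up agent names) in which each agent points to the agents that consume its output, and it uses breadth-first search to list every agent a single faulty component could contaminate.

```python
from collections import deque

# Hypothetical agent ecosystem: FEEDS[a] lists the agents that consume agent a's output.
# All names are illustrative assumptions, not taken from the paper.
FEEDS: dict[str, list[str]] = {
    "search_tool": ["research_agent"],
    "research_agent": ["planner", "report_writer"],
    "planner": ["payments_agent", "scheduler"],
    "report_writer": [],
    "payments_agent": ["ledger"],
    "scheduler": [],
    "ledger": [],
}


def blast_radius(feeds: dict[str, list[str]], faulty: str) -> set[str]:
    """Return every agent downstream of `faulty`, found by breadth-first search.

    These are the agents whose inputs may be corrupted if `faulty` misbehaves.
    """
    affected: set[str] = set()
    queue = deque([faulty])
    while queue:
        current = queue.popleft()
        for consumer in feeds.get(current, []):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    affected.discard(faulty)  # a cycle can lead back to the faulty agent itself
    return affected


if __name__ == "__main__":
    print(sorted(blast_radius(FEEDS, "search_tool")))
```

In this toy graph a fault in `search_tool` reaches six other agents, including `payments_agent` and `ledger`, even though it never talks to them directly; a check on `search_tool` in isolation would miss that exposure.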

3.2 Towards Ethical Multi-Agent Systems of LLMs: A Mechanistic Interpretability Perspective arXiv

- What it is

Lee et al. look at multi-LLM systems, in which agents coordinate with one another, and ask: can mechanistic interpretability help make them ethical? They propose using circuit-level analysis, attention patterns, and internal state probes to understand how agents negotiate goals, share information, and potentially collude or diverge. The work sketches a research agenda: apply mechanistic tools not only to single-model circuits but also to inter-agent coordination motifs.

- Core insight

Ethics in multi-agent LLM systems depends on understanding not only what each model does, but how their internal circuits interact.

- VPA assessment

- Veracity: High—solidly grounded in existing mech-interp literature.

- Plurality: High—explicit multi-agent framing invites diverse interaction patterns.

- Accountability: High—interpretability is explicitly cast as an accountability technology.

- ETUNC relevance

This is direct fuel for the “Parallel Minds, Ethical Alignment” line of posts.
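One of the tools named in this agenda is the internal state probe: a small classifier trained on a model's frozen activations to test whether some property is linearly readable from them. The sketch below illustrates probing in general, not the paper's method. It assumes synthetic 4-dimensional "activations" in which a made-up "coordination" label shifts one dimension; with a real multi-agent system, activations would instead be extracted from each agent's model.

```python
import math
import random

DIM = 4  # toy hidden size; real model activations have far more dimensions


def synthetic_activations(n: int, seed: int) -> list[tuple[list[float], int]]:
    """Generate hypothetical activations: label-1 examples are shifted along
    dimension 0, standing in for an assumed 'coordination' direction."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        vec = [rng.gauss(0.0, 0.5) for _ in range(DIM)]
        vec[0] += 2.0 if label else -2.0
        data.append((vec, label))
    return data


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_probe(data, lr: float = 0.1, epochs: int = 200):
    """Fit a logistic-regression probe with full-batch gradient descent.
    The activations stay frozen; only the probe's w and b are learned."""
    w, b = [0.0] * DIM, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * DIM, 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of log-loss w.r.t. the logit
            for i in range(DIM):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, data) -> float:
    hits = sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


if __name__ == "__main__":
    w, b = train_probe(synthetic_activations(200, seed=0))
    print("held-out accuracy:", accuracy(w, b, synthetic_activations(200, seed=1)))
```

A high held-out accuracy only shows that the label is linearly decodable from the activations; it does not show that the model actually uses that direction, which is why probing results are often paired with causal interventions.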

3.3 Unsupervised Decoding of Encoded Reasoning via Internal Activations arXiv

- What it is

This paper tests mechanistic interpretability tools, specifically the logit lens and an automated paraphrasing pipeline, for reconstructing reasoning traces from internal activations alone. The authors show that in many cases intermediate layers encode surprisingly recoverable “proto-reasoning” that can be translated into human-readable explanations without supervision.

- Core insight

Models are often more legible than we thought, if we use the right decoding tools.

- VPA assessment

- Veracity: High—empirical evaluation of MI tools.

- Plurality: Moderate—mainly technical, but implies many possible oversight designs.

- Accountability: High—demonstrates a concrete pathway from opaque reasoning to auditable traces.

- ETUNC relevance

This is “Echo of Truth” territory: it strengthens the Veracity + Accountability side of VPAR by showing how internal narratives can be surfaced.
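The logit lens reads an intermediate layer by pushing its residual-stream vector through the model's final normalization and unembedding, as if that layer were the last one. The toy sketch below shows only that mechanic. The vocabulary, the 3-dimensional unembedding `W_U`, and the per-layer `RESIDUALS` are made-up values, the layer norm omits the learned gain and bias a real model would use, and the paper's paraphrasing pipeline is not reproduced.

```python
import math

VOCAB = ["yes", "no", "maybe", "<other>"]

# Toy unembedding matrix W_U with shape (d_model=3, vocab=4). Values are made up.
W_U = [
    [1.0, 0.0, 0.0, -1.0],
    [0.0, 1.0, 0.0, -1.0],
    [0.0, 0.0, 1.0, -1.0],
]

# Hypothetical residual-stream vectors after each layer, for one token position.
RESIDUALS = [
    [0.5, 0.1, 0.0],  # layer 1
    [0.4, 0.6, 0.1],  # layer 2
    [0.1, 1.5, 0.2],  # layer 3 (final)
]


def layer_norm(h: list[float], eps: float = 1e-5) -> list[float]:
    """LayerNorm without learned gain or bias (a simplification)."""
    mean = sum(h) / len(h)
    var = sum((x - mean) ** 2 for x in h) / len(h)
    return [(x - mean) / math.sqrt(var + eps) for x in h]


def unembed(h: list[float]) -> list[float]:
    """Project a d_model vector onto vocabulary logits: h @ W_U."""
    return [sum(h[i] * W_U[i][j] for i in range(len(h))) for j in range(len(VOCAB))]


def logit_lens(residuals: list[list[float]]) -> list[str]:
    """Decode the top token at every layer, treating each layer as the last."""
    decoded = []
    for h in residuals:
        logits = unembed(layer_norm(h))
        decoded.append(VOCAB[max(range(len(logits)), key=logits.__getitem__)])
    return decoded


if __name__ == "__main__":
    for layer, token in enumerate(logit_lens(RESIDUALS), start=1):
        print(f"layer {layer}: {token}")
```

Here the toy model leans toward `yes` at layer 1 and settles on `no` from layer 2 onward. With a real model, this kind of per-layer readout is the raw material a paraphrasing step could turn into a human-readable trace.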

3.4 Probabilistic Neuro-Symbolic Reasoning for Sparse Historical Data arXiv

- What it is

Kublashvili proposes a neuro-symbolic framework that fuses Bayesian inference, causal models, and game-theoretic allocation to reason over sparse historical data. Symbolic structures encode causal and normative constraints; neural components handle pattern extraction and uncertainty. The system is designed for domains where you can’t rely on massive datasets but still need principled, interpretable decisions.

- Core insight

Hybrid neuro-symbolic architectures are a pragmatic path to trustworthy reasoning when data is limited but stakes are high.

- VPA assessment

- Veracity: High—probabilistic and causal grounding.

- Plurality: High—explicitly models multiple actors and outcome trade-offs.

- Accountability: High—symbolic layer provides an audit trail.

- ETUNC relevance

This is exactly the Hybrid Reasoning thesis our last post advanced.
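The core idea of letting symbolic constraints gate a probabilistic update can be sketched in a few lines. This is not Kublashvili's implementation: the hypotheses, years, priors, and likelihoods are invented, the `likelihood` field stands in for whatever a neural component would output, a single causal-ordering rule stands in for the constraint layer, and the game-theoretic allocation step is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    name: str
    cause_year: int    # when the proposed cause occurred
    effect_year: int   # when the observed effect occurred
    prior: float       # belief before seeing the evidence
    likelihood: float  # stand-in for a neural model's P(evidence | hypothesis)


def cause_precedes_effect(h: Hypothesis) -> bool:
    """Symbolic causal rule: a cause may not occur after its effect."""
    return h.cause_year <= h.effect_year


CONSTRAINTS = {"cause_precedes_effect": cause_precedes_effect}


def constrained_posterior(hypotheses, constraints):
    """Bayes' rule restricted to hypotheses that satisfy every symbolic rule.

    Returns the normalized posterior and a human-readable audit trail."""
    weights, audit = {}, []
    for h in hypotheses:
        violated = [name for name, rule in constraints.items() if not rule(h)]
        if violated:
            weights[h.name] = 0.0
            audit.append(f"{h.name}: excluded by {', '.join(violated)}")
        else:
            weights[h.name] = h.prior * h.likelihood
            audit.append(f"{h.name}: prior {h.prior} x likelihood {h.likelihood}")
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no admissible hypothesis has non-zero weight")
    return {name: w / total for name, w in weights.items()}, audit


HYPOTHESES = [
    Hypothesis("H1", cause_year=1850, effect_year=1860, prior=0.5, likelihood=0.2),
    Hypothesis("H2", cause_year=1870, effect_year=1860, prior=0.3, likelihood=0.9),
    Hypothesis("H3", cause_year=1840, effect_year=1860, prior=0.2, likelihood=0.6),
]

if __name__ == "__main__":
    posterior, trail = constrained_posterior(HYPOTHESES, CONSTRAINTS)
    for line in trail:
        print(line)
    print({name: round(p, 3) for name, p in posterior.items()})
```

Unconstrained, H2 would receive the most weight (0.3 × 0.9 = 0.27), but the causal rule excludes it, so H1 and H3 share the posterior (roughly 0.45 and 0.55), and the audit trail records exactly why.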

3.5 AI Governance: A CX Leader’s Guide to Responsible AI Implementation CX Network

- What it is

A practical governance guide aimed at customer-experience (CX) leaders, outlining risk categories (shadow AI, vendor risk, misuse), governance roles, and a staged maturity model. It emphasizes aligning AI projects with organizational values and customer trust, not just ROI.

- Core insight

The organizations that “win” in AI are not the ones that move fastest, but the ones whose governance keeps pace with innovation.

- VPA assessment

- Veracity: Moderate—industry-grade, not peer-reviewed, but fairly concrete.

- Plurality: Moderate—addresses multiple stakeholder views (board, CX, IT).

- Accountability: High—governance roles and processes are central.

- ETUNC relevance

This bridges ETUNC theory with board-room practice and hits the “Judgment-Quality AI: Orchestration and Compliance” series squarely.

3.6 Great (Public) Expectations – Ada Lovelace Institute Briefing Ada Lovelace Institute

- What it is

The Ada Lovelace Institute summarizes public attitudes toward AI and calls for governance that reflects those expectations. The briefing argues that failing to align AI deployment with public values risks deepening inequality, eroding trust, and producing policy failure.

- Core insight

The public is not a passive bystander; democratic legitimacy is a core alignment constraint.

- VPA assessment

- Veracity: High—grounded in survey and attitude research.

- Plurality: High—foregrounds diverse public perspectives.

- Accountability: High—frames responsibility as a democratic, not purely technical, issue.

- ETUNC relevance

This is pure Plurality + Resonance: VPAR as a social contract, not just a technical spec.

4. Integration with ETUNC Architecture (VPAR & roles)

- Guardian vs Envoy:

- Toward a Safe Internet of Agents gives a blueprint for where Guardians must watch (ecosystem architecture, interoperability, systemic risk) and where Envoys act (local tasks, domain agents). arXiv

- Constitution Library:

- Neuro-symbolic and probabilistic frameworks suggest how constitutional clauses can be encoded as causal rules and symbolic constraints, with neural components handling messy inputs. arXiv

- Guardian Analysis Dashboard:

- Mechanistic decoding and multi-agent interpretability papers point toward dashboard features: circuit-level anomalies, inter-agent collusion patterns, and automatically generated “reasoning transcripts” for human-in-the-loop (HITL) review. arXiv
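The Guardian/Envoy and Constitution Library mapping above can be sketched as a review loop: an Envoy proposes an action, a Guardian checks it against clauses encoded as symbolic predicates, uses a neural risk score only as a secondary signal, and emits a transcript for HITL review. Everything here is hypothetical: the class names, the two clauses, the `risk_score` field (standing in for a learned classifier), and the 0.7 escalation threshold are illustrative assumptions, not an existing ETUNC API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EnvoyAction:
    agent: str
    kind: str
    amount: float
    risk_score: float  # stand-in for a neural classifier's output in [0, 1]


@dataclass(frozen=True)
class Clause:
    clause_id: str
    text: str
    complies: Callable[[EnvoyAction], bool]  # symbolic check; True means compliant


# Hypothetical Constitution Library entries (illustrative only).
CONSTITUTION = [
    Clause("C1", "Envoys may not transfer more than 10,000 units",
           lambda a: not (a.kind == "transfer" and a.amount > 10_000)),
    Clause("C2", "Envoys may not delete audit records",
           lambda a: a.kind != "delete_audit_log"),
]


def guardian_review(action: EnvoyAction, constitution: list[Clause],
                    escalate_at: float = 0.7) -> tuple[str, list[str]]:
    """Return a verdict ('block', 'escalate' or 'allow') plus a transcript
    that a human-in-the-loop reviewer could read."""
    transcript = [f"Reviewing '{action.kind}' proposed by {action.agent}"]
    violations = [c for c in constitution if not c.complies(action)]
    for c in violations:
        transcript.append(f"Violates {c.clause_id}: {c.text}")
    if violations:
        return "block", transcript
    if action.risk_score >= escalate_at:
        transcript.append(f"Risk score {action.risk_score:.2f} >= {escalate_at}: escalate to HITL")
        return "escalate", transcript
    transcript.append("All clauses satisfied and risk below threshold")
    return "allow", transcript


if __name__ == "__main__":
    for act in [
        EnvoyAction("envoy-1", "transfer", 50_000, 0.10),
        EnvoyAction("envoy-2", "transfer", 500, 0.90),
        EnvoyAction("envoy-3", "transfer", 500, 0.20),
    ]:
        verdict, log = guardian_review(act, CONSTITUTION)
        print(verdict, "|", " / ".join(log))
```

Hard clauses are checked first, so a reassuring neural score can never override a constitutional violation; the neural signal only decides whether an otherwise compliant action should go to a human reviewer.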

We’ve already laid much of this foundation in posts such as “Parallel Minds, Ethical Alignment,” “From Agents to Accountability,” and “Hybrid Reasoning for Ethical Agentic AI.”

5. Ethical and Societal Context (Two levels)

Academic angle:

- Alignment must treat multi-agent interactions and institutional context as first-class concerns. arXiv

- Mechanistic interpretability is evolving from a toy discipline into a realistic oversight tool for encoded reasoning. arXiv

- Governance reports show a convergence between research recommendations and board-level guidance on risk, accountability, and transparency. ITU, PwC

TED-Talk Clarity by James:

- Think of the Internet of Agents like a global fleet of self-driving ships. We’re no longer checking one captain; we’re checking trade routes, ports, and shipping lanes.

- Mechanistic interpretability is like installing glass floors in the engine room so auditors can see the gears moving.

- Governance reports and public-attitude briefings are the equivalent of saying: “This shipping network only survives if citizens trust it not to pollute the ocean or secretly move weapons.”

6. Thematic Synthesis / Trends

- From single-model alignment to ecosystem alignment

- Safety is now about networks of agents, not just one LLM. arXiv

- Mechanistic interpretability as accountability infrastructure

- The shift from “nice to have” to “we can decode the reasoning trace and show it to a regulator or a family member.” arXiv

- Neuro-symbolic hybrids as ETUNC-native tech

- Exactly the mix of logic and learning you need for VPAR-driven constitutions and Guardian rules. Medium, arXiv

- Governance catching up—and public expectations hardening

- Institutional guides and public-attitude briefings are starting to “speak the same language” as the research: risk-based, multi-stakeholder, and alignment-aware. Ada Lovelace Institute, CX Network

8. Suggested Resource Links

Internal (ETUNC archive)

- Hybrid Reasoning for Ethical Agentic AI: ETUNC Weekly Insights (2025-12-01) – establishes the hybrid reasoning baseline for this dive.

- Social Hybrid Intelligence: The New Architecture of Trust for Agentic AI Systems – social/agentic framing for multi-agent governance.

- From Agents to Accountability: The New Frontier in Verifiable, Ethical Intelligence – core link from single agents to system accountability.

- Judgment-Quality AI: Orchestration and Compliance in the Era of Ethical Intelligence – governance + orchestration design space.

External (this week’s sources)

- Toward a Safe Internet of Agents arXiv

- Towards Ethical Multi-Agent Systems of LLMs: A Mechanistic Interpretability Perspective arXiv

- Unsupervised decoding of encoded reasoning using internal activations arXiv

- Probabilistic Neuro-Symbolic Reasoning for Sparse Historical Data arXiv

- AI Governance: A CX Leader’s Guide to Responsible AI Implementation CX Network

- Great (Public) Expectations – Ada Lovelace Institute Ada Lovelace Institute

9. Conclusion

This week, the research conversation quietly confirmed what ETUNC has been building toward:

- Alignment is a living, social, and architectural problem.

- Internal reasoning is no longer an opaque mystery; it’s an emerging audit surface.

- Governance is finally getting the language—and tools—to match the complexity of agentic systems.

VPAR isn’t just a philosophy; it’s increasingly a research-backed design requirement for any serious “internet of agents.”

10. Call to Collaboration

We invite:

- Researchers exploring agentic safety, mechanistic interpretability, and neuro-symbolic methods

- Governance practitioners designing AI oversight playbooks

- Institutions seeking judgment-quality AI for high-stakes domains

…to collaborate on VPAR-aligned architectures, benchmarks, and governance patterns that make the Internet of Agents safe, plural, and accountable by design.

Collaborate → ETUNC.ai/Contact