Introduction

The week of 2025-11-24 to 2025-12-01 delivered pivotal research on neuro-symbolic reasoning, decentralized agentic infrastructures, and introspective LLMs — all of which reinforce the path ETUNC is carving toward Judgment-Quality Intelligence.

A unifying theme has become impossible to ignore:

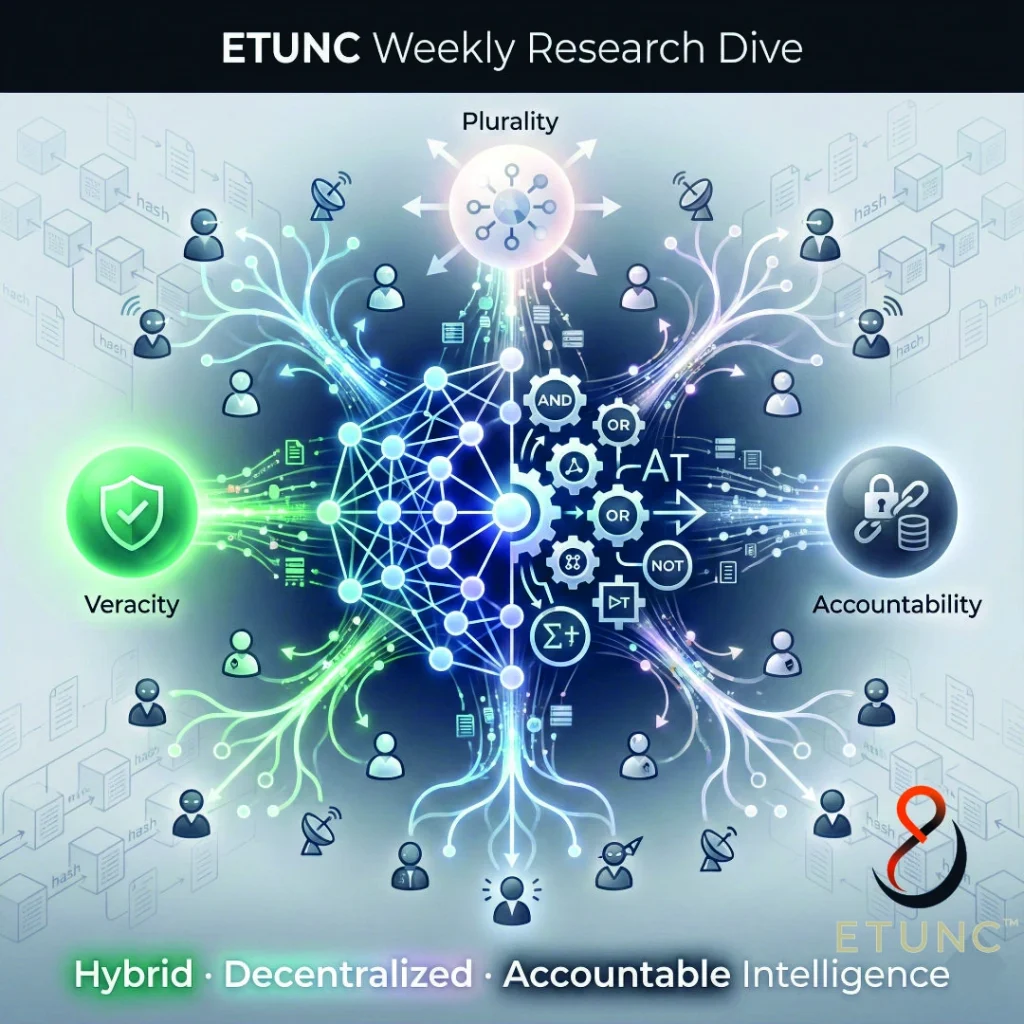

The future of trustworthy AI requires hybrid reasoning, decentralized orchestration, and verifiable cognition.

Each of the five research sources reviewed aligns directly with the ETUNC Compass (Veracity, Plurality, Accountability) and supports the case that the ETUNC architecture is not only timely but positioned ahead of global structural shifts in AI governance and agentic design.

This week’s research demonstrates that ETUNC’s hybrid, neuro-symbolic, agentic execution model is emerging as the reference architecture for high-stakes cognitive systems.

Section 1 — Core Discovery or Research Theme

1. Neuro-Symbolic AI for Auditable Cognitive Extraction (Nature/PMC)

Published 10 days ago

A medical-domain neuro-symbolic system reported 100% accuracy in its evaluation by combining GPT-4 with a deterministic, rule-based expert verifier. The symbolic layer validates or rejects LLM outputs against domain rules and produces a fully auditable chain of reasoning.

Why it matters:

This is a direct validation of ETUNC’s Hybrid Reasoning Model (HRM) — the Guardian symbolic overlay is not optional; it is the mechanism that ensures Veracity and auditability in high-consequence domains.

VPA Analysis:

- Veracity: High — symbolic grounding prevents hallucinations

- Plurality: Low — domain-specific

- Accountability: High — transparent reasoning chain

ETUNC Integration:

Integrate the “symbolic validator” as a parallel Guardian layer that checks every Resonator output against codified rules, policies, and provenance requirements.

2. Hyperon/ASI Chain — Scalable Decentralized Intelligence (SingularityNET)

Published 7 days ago

SingularityNET unveils a fast compiler for MeTTa, the cognitive reasoning language of its Hyperon framework, and formally launches the ASI Chain DevNet, enabling smart-contract-validated cognitive logic across decentralized networks.

Why it matters:

This establishes the blueprint for ETUNC’s Envoy and Coordinator layers:

- decentralized cognition

- verifiable agentic commitments

- cryptographically secured reasoning

- multi-agent orchestration at scale

VPA Analysis:

- Veracity: High — cryptographic provenance

- Plurality: High — heterogeneous agents

- Accountability: High — blockchain-level audit logs

ETUNC Integration:

Evaluate ASI Chain primitives (MeTTa + Rholang) as a model for Envoy protocol design, ensuring tamper-proof orchestration and multi-agent verifiability.
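A minimal sketch of a verifiable agentic commitment, assuming each Envoy shares a secret key with the Coordinator: the Envoy signs a canonical JSON encoding of what it commits to, and the Coordinator recomputes the tag to detect tampering. `Commitment`, `sign_commitment`, and `verify_commitment` are hypothetical names, and HMAC-SHA256 stands in for the public-key signatures and on-chain validation a network like ASI Chain would use; this is not MeTTa or Rholang code.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass
class Commitment:
    """Hypothetical Envoy commitment: which agent promises which action."""
    agent_id: str
    action: str
    payload: dict
    signature: str = ""


def _canonical(agent_id: str, action: str, payload: dict) -> bytes:
    # Sorted keys and fixed separators give signer and verifier identical bytes.
    body = {"agent_id": agent_id, "action": action, "payload": payload}
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def _tag(key: bytes, agent_id: str, action: str, payload: dict) -> str:
    return hmac.new(key, _canonical(agent_id, action, payload), hashlib.sha256).hexdigest()


def sign_commitment(key: bytes, agent_id: str, action: str, payload: dict) -> Commitment:
    """Envoy side: attach an HMAC-SHA256 tag over the canonical encoding."""
    return Commitment(agent_id, action, payload, _tag(key, agent_id, action, payload))


def verify_commitment(key: bytes, c: Commitment) -> bool:
    """Coordinator side: recompute the tag and compare it in constant time."""
    expected = _tag(key, c.agent_id, c.action, c.payload)
    return hmac.compare_digest(expected, c.signature)


if __name__ == "__main__":
    key = b"envoy-7-shared-secret"  # hypothetical per-Envoy secret
    c = sign_commitment(key, "envoy-7", "fetch_records", {"case": "A-102"})
    print(verify_commitment(key, c))  # True
    forged = Commitment(c.agent_id, c.action, {"case": "B-999"}, c.signature)
    print(verify_commitment(key, forged))  # False
```

Because HMAC relies on a shared secret, anyone holding the key can also forge commitments. Verifiability by third parties would require asymmetric signatures (for example Ed25519) from a dedicated cryptography library.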

3. Signs of Introspection in LLMs (Anthropic)

Published 3 days ago

Anthropic identifies early-stage evidence that LLMs can, in limited cases, access and report on their own internal states: a primitive introspective capacity.

Why it matters:

This is foundational for ETUNC’s Resonator self-assessment and could eventually enable the Resonator to:

- evaluate its own uncertainty

- declare reasoning gaps

- flag ethical conflicts

- self-limit risky recommendations

VPA Analysis:

- Veracity: Medium

- Plurality: Medium

- Accountability: Medium-High

ETUNC Integration:

Prototype internal prompting routines allowing the Resonator to provide explicit confidence scoring, internal state descriptions, and reasoning transparency.
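One way to prototype this, sketched under assumptions: append a fixed instruction asking the Resonator to end its answer with a one-line JSON self-report, then parse that line defensively. The `SELF_REPORT:` marker, the JSON keys, and the function names are hypothetical. A self-reported confidence is not guaranteed to reflect the model's actual internal state, so it should be calibrated against observed accuracy before anything depends on it.

```python
import json
import math
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical instruction appended to every Resonator prompt.
SELF_REPORT_INSTRUCTION = (
    "After your answer, write one final line that starts with SELF_REPORT: "
    'followed by single-line JSON with keys "confidence" (a number from 0 to 1), '
    '"reasoning_gaps" (a list of strings) and "internal_state" (a short string).'
)


@dataclass
class SelfReport:
    confidence: float
    reasoning_gaps: List[str] = field(default_factory=list)
    internal_state: str = ""


def build_prompt(question: str) -> str:
    return f"{question}\n\n{SELF_REPORT_INSTRUCTION}"


def parse_self_report(model_output: str) -> Optional[SelfReport]:
    """Extract the SELF_REPORT line; return None if it is missing or malformed."""
    match = re.search(r"^SELF_REPORT:\s*(\{.*\})\s*$", model_output, re.MULTILINE)
    if match is None:
        return None
    try:
        data = json.loads(match.group(1))
        confidence = float(data["confidence"])
    except (ValueError, KeyError, TypeError):  # JSONDecodeError subclasses ValueError
        return None
    if math.isnan(confidence):
        return None
    gaps = data.get("reasoning_gaps", [])
    if not isinstance(gaps, list):
        gaps = [str(gaps)]
    # Clamp so an out-of-range score cannot leak into downstream thresholds.
    confidence = min(max(confidence, 0.0), 1.0)
    return SelfReport(confidence, [str(g) for g in gaps], str(data.get("internal_state", "")))
```

Returning `None` instead of a default score keeps a missing self-report distinguishable from a low-confidence one, so the caller can decide whether to re-prompt or escalate.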

4. Transparent Machine Reasoning for Industry 5.0 (MDPI)

Published 26 days ago

The paper draws a formal link between explainable AI (XAI), counterfactual reasoning, and measurable accountability metrics within Industry 5.0 systems.

Why it matters:

This provides empirical support for ETUNC’s commitment to Trust Calibration and the measurable evaluation of AI alignment — especially crucial for enterprise and institutional deployments.

VPA Analysis:

- Veracity: High

- Plurality: Low

- Accountability: High

ETUNC Integration:

Adopt the paper’s measurable indicators as part of the Guardian human-in-the-loop (HITL) Validation Benchmarks, defining what “safe enough” means in ETUNC deployments.
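A sketch of how measurable indicators could become a pass/fail release gate. The metric names and threshold values below are hypothetical placeholders, not the indicators defined in the MDPI paper; what the sketch shows is the shape: each criterion carries an explicit bound, and a metric that was never measured counts as a failure rather than a silent pass.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Threshold:
    """One 'safe enough' criterion; metric names used below are hypothetical."""
    metric: str
    minimum: Optional[float] = None  # metric must be >= minimum, if set
    maximum: Optional[float] = None  # metric must be <= maximum, if set


def evaluate(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A missing measurement is a failure, not a silent pass.
            failures.append(f"{t.metric}: not measured")
            continue
        if t.minimum is not None and value < t.minimum:
            failures.append(f"{t.metric}: {value} < {t.minimum}")
        if t.maximum is not None and value > t.maximum:
            failures.append(f"{t.metric}: {value} > {t.maximum}")
    return failures


# Hypothetical gate for a Guardian HITL benchmark run.
GATE = [
    Threshold("explanation_fidelity", minimum=0.90),
    Threshold("counterfactual_consistency", minimum=0.85),
    Threshold("unresolved_audit_gaps", maximum=0.0),
]

if __name__ == "__main__":
    run = {"explanation_fidelity": 0.93, "counterfactual_consistency": 0.80}
    print(evaluate(run, GATE))
    # ['counterfactual_consistency: 0.8 < 0.85', 'unresolved_audit_gaps: not measured']
```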

5. Agentic AI: Comprehensive IEEE Survey (IEEE Xplore)

Published 10 days ago

The survey defines Agentic AI as systems capable of:

- dynamic planning

- multi-agent orchestration

- memory utilization

- adaptive reasoning

It differentiates agentic systems from traditional reactive AI.

Why it matters:

This validates ETUNC’s architecture: the Envoy-Coordinator hierarchy is the design pattern for the next decade of agentic systems.

VPA Analysis:

- Veracity: Medium

- Plurality: High

- Accountability: Medium

ETUNC Integration:

Use these frameworks to refine ETUNC’s 18-month agentic roadmap, ensuring global alignment with academic and industry definitions.

Section 2 — Integration with ETUNC Architecture

Three clear architectural imperatives emerge from this week’s findings:

1. Hybrid Reasoning Is Mandatory for Judgment-Quality AI

The symbolic layer is not decorative; it is the compliance engine that enforces Veracity and stops hallucinated outputs before they reach users.

ETUNC Action

Build the Guardian Symbolic Overlay MVP:

- 5 deterministic rules

- full audit trail logging

- Resonator ↔ Guardian dual-channel validation
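The MVP spec above could be prototyped along these lines. This is a minimal sketch: the five rules, the output fields (`answer`, `sources`, `confidence`, `policy`, `needs_review`), and the policy tags are hypothetical examples, and "dual-channel" is interpreted here as the Resonator output and the Guardian verdict traveling side by side, with the output released only when the verdict approves it. Every rule runs on every output (no short-circuiting), so each audit record shows the full rule-by-rule result.

```python
import json
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A rule inspects a Resonator output and returns (passed, requirement description).
Rule = Callable[[dict], Tuple[bool, str]]
ALLOWED_POLICIES = ("general", "medical", "legal")  # hypothetical policy tags


def rule_nonempty_answer(out: dict) -> Tuple[bool, str]:
    return bool(str(out.get("answer", "")).strip()), "answer is non-empty"


def rule_has_sources(out: dict) -> Tuple[bool, str]:
    return bool(out.get("sources")), "cites at least one provenance source"


def rule_confidence_range(out: dict) -> Tuple[bool, str]:
    c = out.get("confidence")
    ok = isinstance(c, (int, float)) and not isinstance(c, bool) and 0.0 <= c <= 1.0
    return ok, "confidence is a number in [0, 1]"


def rule_known_policy(out: dict) -> Tuple[bool, str]:
    return out.get("policy") in ALLOWED_POLICIES, "policy tag is recognized"


def rule_low_confidence_flagged(out: dict) -> Tuple[bool, str]:
    c = out.get("confidence")
    low = isinstance(c, (int, float)) and c < 0.5
    return (not low) or out.get("needs_review") is True, "confidence below 0.5 is flagged for review"


RULES: List[Rule] = [
    rule_nonempty_answer,
    rule_has_sources,
    rule_confidence_range,
    rule_known_policy,
    rule_low_confidence_flagged,
]


@dataclass
class Verdict:
    approved: bool
    failures: List[str]


def guardian_validate(output: dict, audit_log: List[str]) -> Verdict:
    """Run every rule and append one JSON audit record per validation."""
    results = [(rule.__name__, *rule(output)) for rule in RULES]
    failures = [f"{name} failed: {reason}" for name, passed, reason in results if not passed]
    audit_log.append(json.dumps({
        "timestamp": time.time(),
        "output_id": output.get("id"),
        "results": [{"rule": name, "passed": passed} for name, passed, _ in results],
        "approved": not failures,
    }, sort_keys=True))
    return Verdict(approved=not failures, failures=failures)


def release(output: dict, audit_log: List[str]) -> Dict[str, object]:
    """Dual-channel release: the output travels only alongside an approving verdict."""
    verdict = guardian_validate(output, audit_log)
    return {"output": output if verdict.approved else None, "verdict": verdict}
```

Keeping each rule a plain, deterministic function makes the rule set easy to review, version, and replay against archived outputs when an audit question comes up.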

2. Agentic Orchestration Must Be Decentralized

Centralized systems cannot deliver verifiable intelligence at scale. Hyperon, MeTTa, and ASI Chain show the future:

- decentralized

- cryptographically validated

- heterogeneous agent orchestration

ETUNC Action

Study ASI Chain consensus mechanics to integrate a verifiable orchestration ledger into the ETUNC Coordinator.
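As a starting point before any consensus work, a single-node, hash-chained log already makes orchestration records tamper-evident: each entry commits to the SHA-256 hash of its predecessor, so editing or reordering past records breaks verification. This is a hypothetical sketch (`Ledger`, the record fields, and the genesis value are assumptions). It does not implement ASI Chain's consensus, which is what would let multiple independent nodes agree on the same chain, and it cannot detect truncation of the newest entries unless the latest hash is anchored somewhere external.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # hypothetical placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class Ledger:
    """Append-only, hash-chained log of Coordinator orchestration events."""
    entries: List[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = copy.deepcopy(record)  # later edits to the caller's dict must not alter history
        digest = _entry_hash(prev, record)
        self.entries.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; editing, reordering, or deleting a non-final entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _entry_hash(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append({"coordinator": "c1", "assign": "envoy-7", "task": "fetch_records"})
    ledger.append({"envoy": "envoy-7", "status": "done"})
    print(ledger.verify())  # True
    ledger.entries[0]["record"]["task"] = "delete_records"
    print(ledger.verify())  # False
```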

3. Internal Introspection Enables Ethical Self-Regulation

Anthropic’s introspection research opens a future where LLMs can flag:

- uncertainty

- inconsistency

- risk

- missing information

ETUNC Action

Implement introspection-prompting to strengthen the Resonator’s metacognitive capacity.
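Introspective flags are only useful if they change what happens next. The following is a hypothetical routing sketch for the four flag types listed above: each flag maps to an escalation level, the most cautious level wins, and an unrecognized flag fails closed to human review. The flag strings, routes, and their ordering are illustrative assumptions, not an ETUNC specification.

```python
from enum import IntEnum
from typing import Iterable


class Route(IntEnum):
    """Escalation levels; a higher value means a more cautious route."""
    RELEASE = 0
    RELEASE_WITH_CAVEAT = 1
    GUARDIAN_REVIEW = 2
    HUMAN_REVIEW = 3


# Hypothetical mapping of introspective flag types to escalation levels.
FLAG_ROUTES = {
    "missing_information": Route.RELEASE_WITH_CAVEAT,
    "uncertainty": Route.RELEASE_WITH_CAVEAT,
    "inconsistency": Route.GUARDIAN_REVIEW,
    "risk": Route.HUMAN_REVIEW,
}


def route(flags: Iterable[str]) -> Route:
    """Return the most cautious route among the raised flags; unknown flags fail closed."""
    return max((FLAG_ROUTES.get(f, Route.HUMAN_REVIEW) for f in flags), default=Route.RELEASE)


if __name__ == "__main__":
    print(route([]).name)                                # RELEASE
    print(route(["uncertainty", "inconsistency"]).name)  # GUARDIAN_REVIEW
    print(route(["novel_flag"]).name)                    # HUMAN_REVIEW
```

Failing closed on unknown flags means a new flag type introduced in the Resonator's self-report cannot silently bypass review before the routing table is updated.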

Section 3 — Ethical and Societal Context

This week demonstrates a paradigm shift from:

black-box prediction → transparent, accountable cognitive systems.

Key implications:

- Society will demand provable reasoning from institutional AI.

- Regulators will require auditability, traceability, and policy alignment.

- Organizations will rely on hybrid reasoning to avoid compliance failures.

- Individuals will expect contextual fidelity in digital legacy systems.

ETUNC is architecturally aligned to this trajectory:

Veracity ensures factual grounding.

Plurality ensures perspective diversity.

Accountability ensures provable responsibility.

This triple alignment is emerging as the global standard for ethical cognition.

Section 4 — Thematic Synthesis / Trends

Three macro-trends are consolidating:

Trend 1 — Neuro-Symbolic Architecture Becomes the Trust Standard

Any system operating in healthcare, law, governance, or corporate memory must combine neural inference with symbolic constraint.

Trend 2 — Decentralized Cognition Outperforms Monolithic AI

Distributed agents outperform single models in verifiability, resilience, and cross-domain reasoning.

Trend 3 — Introspective AI Systems Will Become the Norm

AI that can analyze its own reasoning will outperform systems that can’t explain themselves.

Together, these trends form the “Judgment-Quality AI” landscape — and ETUNC’s architecture sits directly on this convergence point.

Suggested Resource Links

ETUNC Internal

- Judgment-Quality AI Orchestration

- Bridging Intelligence and Integrity: Hybrid Reasoning and Agentic Governance in AI

Academic / External

- Nature/PMC – Neuro-Symbolic Medical Verification

- SingularityNET – Hyperon & ASI Chain

- Anthropic – LLM Introspection Study

- MDPI – Transparent Machine Reasoning

- IEEE – Agentic AI Comprehensive Survey

Conclusion

This week’s research clearly indicates that ETUNC is aligned with the inevitable future of AI governance, enterprise intelligence, and ethical digital legacy systems.

The world is migrating from probabilistic text generators to verifiable cognitive architectures — and ETUNC is positioned to lead this transition.

The immediate next steps are to:

- finalize symbolic rule validation in Guardian

- begin decentralized orchestration prototyping

- integrate introspective reasoning structures

ETUNC is architecting the next era of trustworthy cognition — one where truth is verifiable, perspectives are encoded, and accountability is enforceable.

Call to Collaboration

Researchers, architects, and ethical designers interested in building Judgment-Quality Intelligence are invited to collaborate with ETUNC.

We welcome partnerships across AI ethics, agentic governance, digital legacy systems, and institutional knowledge preservation.
