Introduction

Artificial intelligence is undergoing a decisive shift. The era of single, monolithic models attempting to mimic generalized intelligence is giving way to Social Hybrid Intelligence (SHI) — the coordinated collaboration of humans and specialized AI agents operating within shared cognitive spaces. Over the past two weeks, research from arXiv, preprints, and technical blogs has highlighted this transformation with unprecedented clarity.

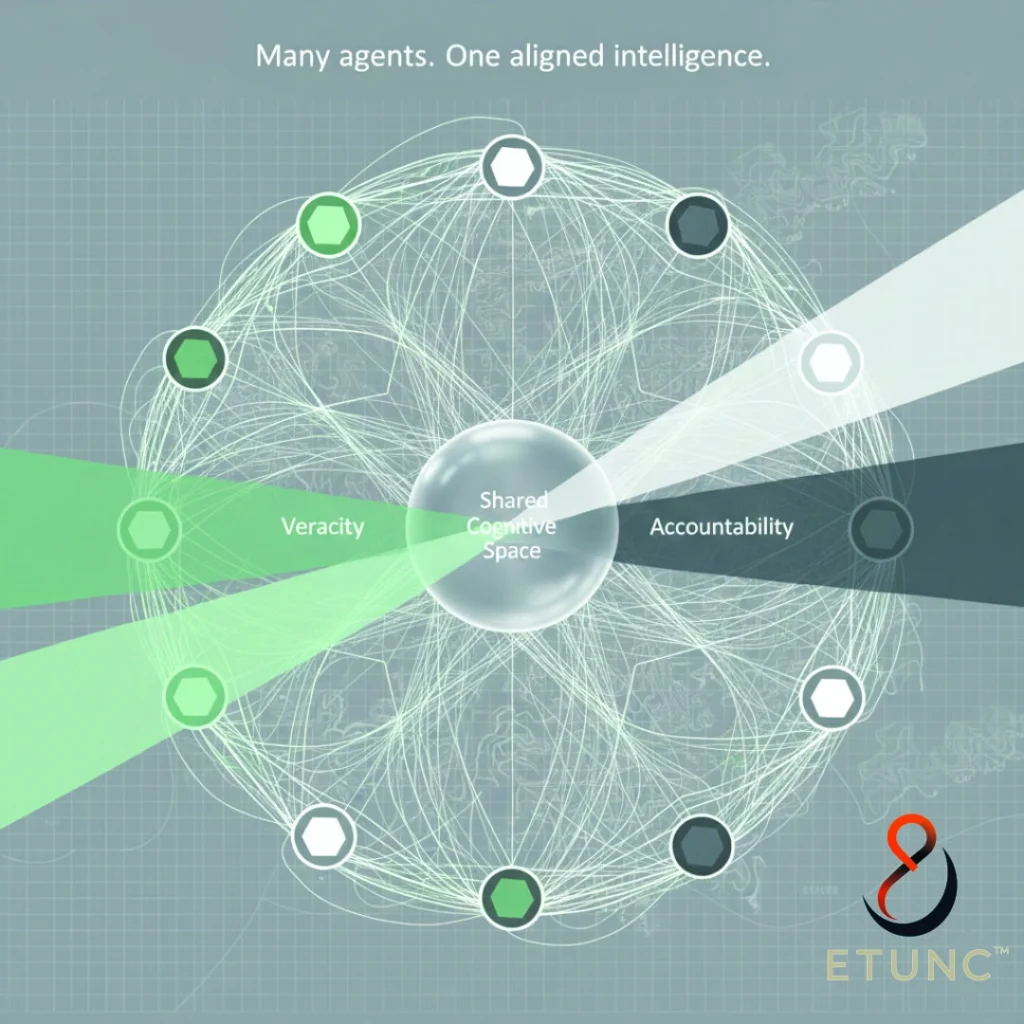

For ETUNC, this shift is not a surprise — it is validation. The Guardian–Envoy–Resonator architecture anticipates exactly what current research now confirms: scalable trust in AI systems requires plural reasoning, verifiable truth-anchoring, and structured accountability across networks of agents and humans.

This research cluster underscores three major themes:

- Unified Human–AI Cognitive Spaces

- Neuro-Symbolic Imperatives for Reliability

- Governable, Transparent Agentic Orchestration

These are not just technical advances — they are foundational pillars for building Judgment-Quality AI. And they map directly onto ETUNC’s Compass of Veracity, Plurality, and Accountability (VPA).

Section 1 — Core Research Themes

Source 1 — Human-Machine Social Hybrid Intelligence (HMS-HI)

arXiv, Oct 28, 2025

This study introduces the Social Hybrid Intelligence framework, a leap beyond traditional Human-in-the-Loop models. Human experts and LLM-powered agents exist not in hierarchy, but as peers within a Shared Cognitive Space (SCS) — a unified context model ensuring that every participant maintains aligned situational awareness.

Why it matters:

This is among the first formal architectures to mirror ETUNC’s Envoy and Resonator interplay. The Dynamic Role and Task Allocation (DRTA) mechanism closely resembles ETUNC’s Envoy mediation layer: perception agents, reasoners, and validators dynamically exchange roles to optimize clarity and correctness.

VPA Alignment:

- Veracity: Shared cognitive grounding prevents divergent realities.

- Plurality: Diverse agents + human expertise = richer interpretive range.

- Accountability: Roles are assigned, tracked, and auditable.
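One way to picture a Shared Cognitive Space with dynamic role allocation is as a shared context store paired with an append-only log of role assignments. The sketch below is illustrative only: `SharedCognitiveSpace`, `RoleAssignment`, `assign_role`, and the sample participants are hypothetical names, not taken from the HMS-HI paper or from ETUNC's implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class RoleAssignment:
    """One auditable record: who was given which role, and why."""
    participant: str
    role: str
    reason: str
    timestamp: str


@dataclass
class SharedCognitiveSpace:
    """Hypothetical shared context that every participant reads and writes."""
    context: dict = field(default_factory=dict)
    roles: dict = field(default_factory=dict)      # participant -> current role
    audit_log: list = field(default_factory=list)  # append-only history

    def assign_role(self, participant: str, role: str, reason: str) -> None:
        # Reassignment overwrites the current role but never the history.
        self.roles[participant] = role
        self.audit_log.append(
            RoleAssignment(participant, role, reason,
                           datetime.now(timezone.utc).isoformat())
        )

    def history(self, participant: str) -> list:
        # Roles the participant has held, in order of assignment.
        return [r.role for r in self.audit_log if r.participant == participant]


scs = SharedCognitiveSpace()
scs.context["incident"] = "sensor anomaly"  # illustrative shared fact
scs.assign_role("agent-1", "perception", "initial allocation")
scs.assign_role("human-expert", "validator", "domain expertise")
scs.assign_role("agent-1", "reasoner", "perception task complete")
```

Because every reassignment is appended rather than overwritten, `scs.history("agent-1")` can reconstruct how a participant's role changed over time, which is the "assigned, tracked, and auditable" property in miniature.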

Source 2 — Agentic AI: A Comprehensive Survey

arXiv, Oct 29, 2025

This sweeping survey classifies agentic systems into Symbolic/Classical and Neural/Generative lineages. The conclusion is explicit: the future of reliable agentic systems demands neuro-symbolic fusion.

Why it matters:

This is direct scholarly confirmation of ETUNC’s Guardian design. High-stakes, ethical decision-making cannot emerge from neural models alone. Symbolic logic — rules, constraints, causal reasoning — must operate as the backbone.

VPA Alignment:

- Veracity: Symbolic logic enforces falsifiability.

- Plurality: Neural + symbolic = cognitive diversity by design.

- Accountability: Symbolic planning produces inherent audit trails.
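To make the neuro-symbolic idea concrete, here is a minimal sketch in which a proposal (standing in for a neural model's output) is checked against explicit rules, and every rule outcome is recorded. The names `Rule`, `symbolic_review`, and the two sample rules are assumptions made for illustration; they do not describe the survey's taxonomy or ETUNC's Guardian code.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    check: Callable[[dict], bool]  # returns True when the proposal complies


def symbolic_review(proposal: dict, rules: list) -> dict:
    """Check a proposal against explicit rules and record every outcome."""
    trail = [(rule.name, rule.check(proposal)) for rule in rules]
    return {
        "approved": all(passed for _, passed in trail),
        "audit_trail": trail,
    }


# Hypothetical rules; a real system would define its own constraints.
RULES = [
    Rule("has_cited_source", lambda p: bool(p.get("sources"))),
    Rule("confidence_at_least_0.8", lambda p: p.get("confidence", 0.0) >= 0.8),
]

# Stand-in for the output of a neural model (hand-written here).
proposal = {"claim": "Approve request", "confidence": 0.91, "sources": []}
result = symbolic_review(proposal, RULES)
```

In this example the proposal is rejected despite its high confidence, because it cites no sources. The per-rule trail shows exactly which constraint failed, which is the kind of audit trail a purely neural pipeline does not produce on its own.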

Source 3 — Interpretability as Internal Alignment

arXiv, Sep 10, 2025

The paper argues that alignment should primarily target a model’s internal mechanisms, through mechanistic interpretability, rather than its external behavior alone. Understanding and manipulating internal representations becomes essential to ensuring trustworthy autonomy.

Why it matters:

ETUNC’s Resonator layer depends on internal transparency. This research confirms that evaluators must see why an agent reached a conclusion, not just whether the output appears correct.

VPA Alignment:

- Veracity: Internal structures can be tested for truth consistency.

- Plurality: Exposes multiple reasoning pathways rather than a single opaque output.

- Accountability: Enables true causal tracing within the agent’s logic flow.

Source 4 — The Manager Agent Challenge for Human-AI Teams

arXiv, Oct 2, 2025

This paper identifies the core obstacle in multi-agent/human-AI collaboration: orchestration. The proposed solution is a Manager Agent that performs dynamic task partitioning, progress monitoring, and contextual mediation.

Why it matters:

This is a near-perfect analog to ETUNC’s Envoy module. It confirms that complex systems require a dedicated coordination agent responsible for coherence, flow, and task governance.

VPA Alignment:

- Veracity: Structured workflows reduce reasoning drift.

- Plurality: Optimizes specialized agent contributions.

- Accountability: One coordinating agent becomes an auditable anchor.
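The coordination pattern described above (task partitioning plus progress monitoring) can be sketched in a few lines. This is a toy illustration under stated assumptions: the `ManagerAgent` and `Subtask` classes, the skill-matching rule, and the sample workers are hypothetical and are not the paper's design or ETUNC's Envoy.

```python
from dataclasses import dataclass, field


@dataclass
class Subtask:
    description: str
    required_skill: str
    assignee: str = ""
    status: str = "pending"


@dataclass
class ManagerAgent:
    workers: dict  # worker name -> skill (humans and agents alike)
    subtasks: list = field(default_factory=list)

    def partition(self, steps: list) -> None:
        """Turn (description, skill) pairs into subtasks assigned by skill."""
        for description, skill in steps:
            task = Subtask(description, skill)
            # First worker advertising the skill wins; otherwise the task
            # stays "unassigned" so a human can step in.
            task.assignee = next(
                (name for name, s in self.workers.items() if s == skill),
                "unassigned",
            )
            self.subtasks.append(task)

    def mark_done(self, description: str) -> None:
        for task in self.subtasks:
            if task.description == description:
                task.status = "done"

    def progress(self) -> float:
        """Fraction of subtasks completed (0.0 when there are none)."""
        done = sum(t.status == "done" for t in self.subtasks)
        return done / len(self.subtasks) if self.subtasks else 0.0


manager = ManagerAgent({"agent-a": "retrieval", "analyst": "review"})
manager.partition([
    ("gather filings", "retrieval"),
    ("check figures", "review"),
    ("translate summary", "translation"),
])
manager.mark_done("gather filings")
```

Keeping assignment and status in one place is what makes the coordinator an audit anchor: anyone can ask it who owns a subtask and how far the overall work has progressed.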

Source 5 — Decentralized Multi-Agent Reinforcement Learning (MARL) Advances

Research Blog, Nov 13, 2025

This research explores decentralized cooperation without central controllers, emphasizing distributed optimization for resource allocation and network flow.

Why it matters:

ETUNC’s Proxy nodes rely on localized autonomy with global validation. MARL research validates the feasibility of distributed cognition with agents learning through decentralized feedback loops — but also highlights accountability challenges.

VPA Alignment:

- Veracity: Local agents risk divergence — requiring centralized validation (Resonator).

- Plurality: Distributed cognition thrives on diverse local perspectives.

- Accountability: MARL systems lack inherent traceability, which is the gap ETUNC’s Resonator is meant to close through global validation and auditability.
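The "local autonomy, global validation" idea can be illustrated with a simple divergence check. The sketch below does not implement MARL training; it only shows a central validator comparing locally produced estimates against the group median. The function name `validate_globally`, the median-based rule, the tolerance, and the sample values are all assumptions chosen for illustration.

```python
from statistics import median


def validate_globally(local_estimates: dict, tolerance: float) -> dict:
    """Flag agents whose estimate strays too far from the group median."""
    center = median(local_estimates.values())
    return {
        agent: abs(value - center) <= tolerance
        for agent, value in local_estimates.items()
    }


# Hypothetical estimates reported by three local agents.
estimates = {"proxy-1": 10.2, "proxy-2": 9.8, "proxy-3": 14.5}
report = validate_globally(estimates, tolerance=1.0)
```

Here `proxy-3` is flagged because its estimate sits well outside the tolerance around the median. A production validator would need a more robust rule, but the shape is the same: agents act locally, and a separate step checks them against a shared reference.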

Section 2 — Integration With ETUNC Architecture

Across all five sources, the alignment with ETUNC’s VPA architecture is unmistakable:

Human–AI Cognitive Fusion → Envoy + SCS

Shared Cognitive Spaces support ETUNC’s Envoy in maintaining synchronized context across agents and humans.

Neuro-Symbolic Reasoning → Guardian Layer

Neural models provide adaptability; symbolic planning provides reliability — exactly how the Guardian operates.

Internal Interpretability → Resonator Layer

ETUNC’s Resonator becomes the mechanistic interpreter, tracing reasoning paths for ethical and causal validation.

Dynamic Orchestration → Envoy’s Manager Function

The Envoy acts as a Manager Agent, decomposing tasks and coordinating human-AI workflows.

Distributed Learning → Proxy Nodes

Proxy agents can autonomously adapt, while the Resonator ensures global alignment and auditability.

These research findings do not merely support ETUNC’s architecture; the architecture anticipated them.

Section 3 — Ethical and Societal Context

The movement toward Social Hybrid Intelligence raises urgent ethical questions:

Who defines truth in a multi-agent system?

ETUNC solves this through Veracity anchored in symbolic logic, factual grounding, and multi-perspective synthesis.

How do we prevent cognitive monoculture?

Plurality ensures that conflicting perspectives are preserved, compared, and reconciled.

How do we prevent untraceable, emergent failures?

Accountability provides explicit reasoning trails, identity tracking, and governance gates for human oversight.

In a world facing polarized narratives, deepfakes, synthetic persuasion, and institutional distrust, VPA is not merely an architecture — it is a path to reclaiming epistemic stability.

Section 4 — Thematic Synthesis / Trends

Three dominant trends emerge:

Trend 1 — AI Is Becoming a Social Actor

Not socially aware, but socially embedded. Agents now operate in teams — with humans, symbolic systems, and other AI units — each contributing specialized cognition.

Trend 2 — Neuro-Symbolic Systems Are Non-Optional

Symbolic reasoning isn’t returning — it’s dominating the reliability conversation. Alignment now requires explainable structures and logical constraints.

Trend 3 — Governance Is the New Frontier

The research surveyed here suggests that trust in agentic AI will come not from model size but from:

- structured oversight,

- transparent reasoning,

- accountable orchestration, and

- ethical constraint layers.

This trend positions ETUNC’s VPA as a category-defining approach.

Suggested Resource Links

ETUNC Internal

- Parallel Minds, Ethical Alignment: How Cutting-Edge AI Research Validates ETUNC’s VPAR Architecture for Judgment-Quality AI

- Judgment-Quality AI: How Multi-Agent Orchestration Validates ETUNC’s Ethical Design

Academic

- Social Hybrid Intelligence frameworks (arXiv: Oct 2025)

- Neuro-Symbolic Agentic AI Survey (arXiv: Oct 2025)

- Interpretability as Internal Alignment (arXiv: Sep 2025)

- Manager Agent Research (arXiv: Oct 2025)

- MARL Distributed Optimization Papers

Conclusion

The last two weeks mark a decisive inflection point in AI research. The most respected labs and scholars now converge on principles that ETUNC has championed from its outset:

- Truth must be verifiable.

- Perspectives must be plural.

- Agents must be accountable.

What emerges is a coherent picture of the coming decade: AI will not be a monolithic oracle, but a constellation of accountable, coordinated agents operating in partnership with humans.

And ETUNC stands at the forefront of implementing this paradigm as a Living Intelligence System capable of scaling trust, ethics, and institutional continuity into the future.

Call to Collaboration

ETUNC invites researchers, institutions, and ethical AI practitioners to join the development of VPA-aligned agentic systems.

Together, we can define the governance infrastructure for trustworthy autonomous intelligence.
