Introduction: The Shifting Paradigm of AI Intelligence & Alignment

The discourse among leading AI researchers is undergoing a profound transformation. For years, the pursuit of Artificial General Intelligence (AGI) focused heavily on building ever-larger monolithic models: systems that aim to encompass all knowledge and reasoning within a single, ever-growing neural network. Impressive as these models are, the approach has exposed persistent challenges, particularly in managing biases, ensuring verifiable reasoning, and achieving genuine alignment with nuanced human values.

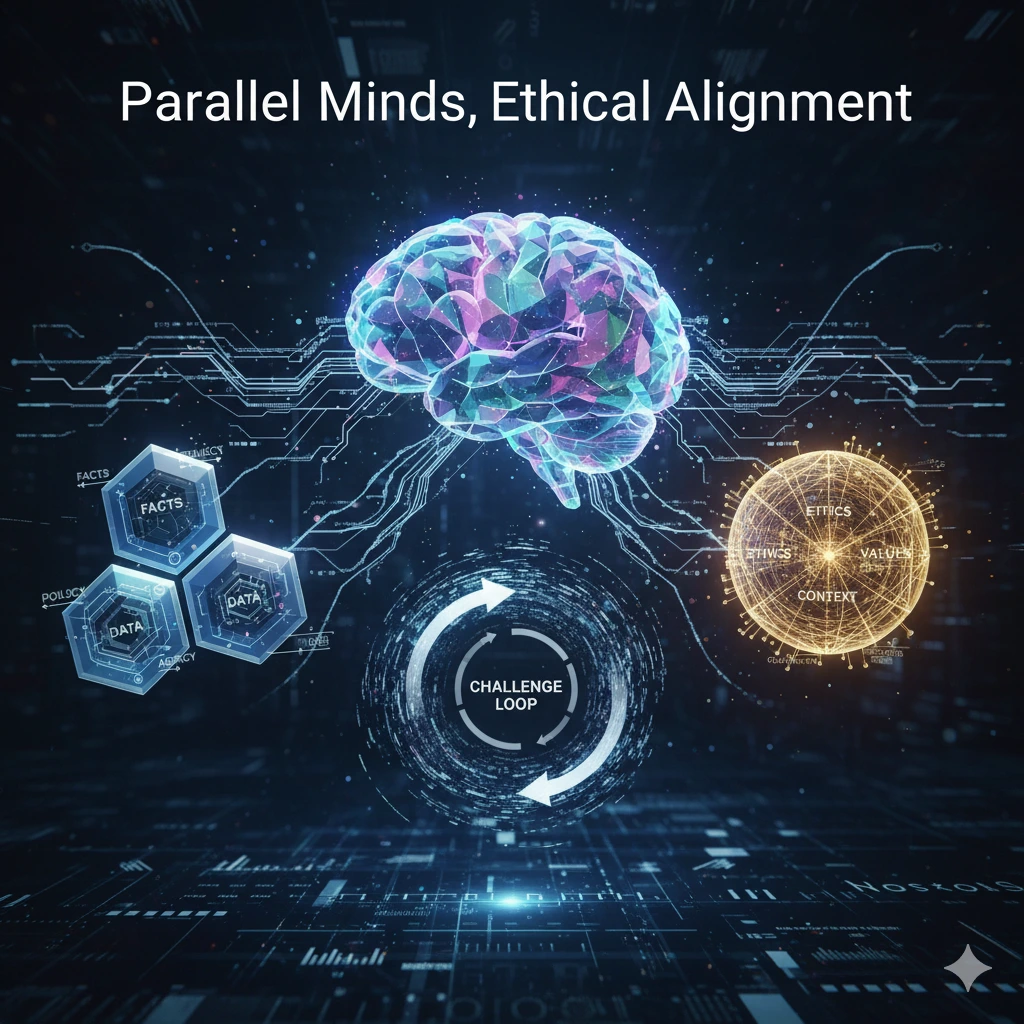

Today, a powerful new paradigm is emerging: the concept of “parallel thinking,” or “splitting AI into multiple minds.” This line of research explores how specialized, distinct AI instances can collaborate, audit each other, and combine their cognitive strengths to solve complex problems more effectively, enhance overall intelligence, and, crucially, improve alignment. This shift acknowledges that true intelligence, and indeed true wisdom, often arises from the interplay of diverse perspectives, critical challenge, and iterative refinement. It is a direct response to the limitations of single-minded systems and to the growing need for AI that is not only intelligent but also trustworthy, transparent, and ethically sound.

At ETUNC.ai, this emerging research brings a profound sense of validation. From our inception, the VPAR (Veracity, Plurality, Accountability, Resonance) model was architected precisely on this principle of distributed, specialized AI cognition. While the broader AI community is now deeply exploring how to build such multi-agent systems for enhanced alignment, ETUNC has been engineering, refining, and deploying them as the core of our Judgment-Quality AI framework.

The VPAR Model: A Forward-Looking Multi-Agent Architecture

Our mission at ETUNC.ai is to usher in an era of Judgment-Quality AI – systems that don’t just process data but render decisions that are factually accurate, ethically aligned, contextually wise, and fully accountable. To achieve this, we recognized early on that a singular AI entity, however powerful, would invariably struggle with the inherent plurality of human values and the nuanced complexities of ethical decision-making.

This is where the VPAR model diverges from the monolithic approach. We engineered our architecture to embody the very essence of “splitting AI into multiple minds,” creating a dynamic interplay between specialized agents:

- The Envoy Agents: Minds of Veracity and Plurality. Our Envoy agents are purpose-built to act as the factual, policy-driven “minds” of the system. Each Envoy can be deeply specialized—trained on a client’s specific legal documents, internal policies, or industry regulations. When a complex query is presented, multiple Envoy agents can be engaged, each bringing its specialized factual domain knowledge and interpretation. This inherent Plurality is critical; it ensures that diverse factual perspectives, even conflicting ones, are brought to the forefront, countering the narrowness that can plague monolithic systems. Their core directive is Veracity – grounding every piece of information in immutable, auditable sources.

- The Guardian Agent: The Mind of Accountability and Resonance. Complementing the Envoys’ factual rigor is the Guardian agent, representing the ethical and contextual “mind.” The Guardian’s role is not to generate facts, but to critically evaluate the Envoys’ factual output against the client’s deeply enshrined “True North”: their core values, ethical constitution, and a rich library of past ethical precedents. The Guardian ensures Accountability by constantly assessing potential ethical blind spots, value conflicts, or areas where a purely factual response might lack contextual wisdom. Its ultimate goal is to guide the system towards Resonance: decisions that are not just factually correct, but also ethically “right” and deeply aligned with organizational values.
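To make the Envoy side of this division of labor concrete, here is a minimal Python sketch under stated assumptions. `Finding`, `Envoy`, and `gather` are hypothetical names invented for illustration, not ETUNC APIs, and naive keyword matching stands in for real retrieval over a client’s documents. The sketch shows only the two properties named above. Plurality means findings from every Envoy are pooled, even when they conflict. Veracity means any finding without a source is dropped.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Finding:
    """One claim from an Envoy, tied to the source document it came from."""
    envoy: str
    claim: str
    source_id: str


@dataclass
class Envoy:
    """Hypothetical domain-specialized agent over a small document store.

    `documents` maps a source id to its text. Naive keyword overlap stands
    in for the retrieval a production system would actually use.
    """
    name: str
    documents: dict[str, str] = field(default_factory=dict)

    def answer(self, query: str) -> list[Finding]:
        terms = {t.lower() for t in query.split()}
        findings = []
        for source_id, text in self.documents.items():
            words = {w.lower().strip(".,;:") for w in text.split()}
            if terms & words:  # any shared keyword counts as relevant
                findings.append(Finding(self.name, text, source_id))
        return findings


def gather(envoys: list[Envoy], query: str) -> list[Finding]:
    """Ask every Envoy and pool their findings.

    Findings that disagree are all kept (Plurality); any finding without a
    source id is discarded (Veracity).
    """
    pooled = []
    for envoy in envoys:
        pooled.extend(f for f in envoy.answer(query) if f.source_id)
    return pooled


if __name__ == "__main__":
    legal = Envoy("legal", {"REG-7": "Customer data must be retained for 7 years."})
    privacy = Envoy("privacy", {"POL-2": "Customer data should be deleted on request."})
    for finding in gather([legal, privacy], "customer data retention"):
        print(finding.envoy, finding.source_id, finding.claim)
```

In the example, the legal and privacy Envoys return conflicting guidance about the same data. Keeping both, each tied to its source id, leaves the conflict visible for the Guardian to weigh instead of letting one domain silently win.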

The interaction between these distinct “minds” is orchestrated through our innovative Challenge Loop. This isn’t a simple hand-off; it’s a dynamic, iterative dialogue. When the Guardian identifies a potential ethical misalignment or a lack of Resonance in an Envoy’s output, it issues a specific “Challenge”—a prompt for the Envoy to refine its response, to consider a different ethical angle, or to contextualize its findings more deeply. This loop continues, with each agent refining its perspective, until a truly resonant and ethically robust judgment is achieved. It is, in essence, ETUNC’s engineered solution for multi-agent alignment and verifiable consensus-building.
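The Challenge Loop described above can be sketched as a bounded iteration. This is an illustrative Python sketch, not ETUNC’s implementation. The Envoy and Guardian are modeled as plain callables, and `Verdict`, `LoopResult`, `challenge_loop`, `max_rounds`, and the toy agents are all hypothetical. The `max_rounds` bound is an assumption of the sketch, since the prose above says only that the loop continues until a resonant judgment is reached.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Verdict:
    """Guardian's evaluation of one draft: approve it, or issue a Challenge."""
    approved: bool
    challenge: Optional[str] = None


@dataclass
class LoopResult:
    answer: str
    rounds: int
    resolved: bool
    # (draft, verdict) pairs in order, kept so the exchange can be audited.
    transcript: list = field(default_factory=list)


def challenge_loop(
    envoy: Callable[[str, Optional[str]], str],
    guardian: Callable[[str], Verdict],
    query: str,
    max_rounds: int = 5,
) -> LoopResult:
    """Alternate Envoy drafts and Guardian challenges until approval.

    The loop is bounded by `max_rounds`; if the Guardian never approves,
    the last draft is returned with resolved=False so it can be escalated.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    challenge: Optional[str] = None
    transcript = []
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = envoy(query, challenge)  # refine using the latest Challenge
        verdict = guardian(draft)
        transcript.append((draft, verdict))
        if verdict.approved:
            return LoopResult(draft, round_no, True, transcript)
        challenge = verdict.challenge
    return LoopResult(draft, max_rounds, False, transcript)


# Toy stand-ins for the two agents, for illustration only.
def toy_envoy(query: str, challenge: Optional[str]) -> str:
    draft = f"Recommend approving the {query}."
    return draft if challenge is None else f"{draft} {challenge}"


def toy_guardian(draft: str) -> Verdict:
    if "notify affected staff" in draft:
        return Verdict(approved=True)
    return Verdict(approved=False, challenge="Also notify affected staff.")


if __name__ == "__main__":
    result = challenge_loop(toy_envoy, toy_guardian, "vendor contract")
    print(result.rounds, result.resolved, result.answer)
```

Bounding the loop is a design choice in this sketch. A disagreement that never converges comes back with `resolved=False` and its full transcript, so it can be routed to a human reviewer rather than cycling indefinitely.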

Validation in Action: Solving for Intelligence and Alignment

Recent research suggests that this approach of specialized “minds checking each other” can be a powerful mechanism for both boosting AI intelligence and ensuring robust alignment.

- Boosting Intelligence through Specialization and Synthesis: The VPAR model’s distributed cognition directly contributes to more comprehensive and contextually rich outputs. By leveraging multiple specialized Envoy agents, the system can synthesize information from diverse factual domains. This parallel processing of specialized knowledge, followed by the Guardian’s ethical synthesis, mirrors the human capacity for integrating analytical thought with wisdom. The result is a decision that is more robust, less prone to oversimplification, and richer in actionable insights than what a single, broad AI could achieve alone.

- Ensuring Alignment through Mutual Audit and Iterative Refinement: Perhaps the strongest point of convergence with current research is the Challenge Loop’s function as a live, dynamic alignment mechanism. Researchers are exploring how different AI instances can audit each other’s work to catch errors or ensure adherence to rules. The Guardian agent fulfills precisely this role for ETUNC. It doesn’t just “check” for compliance; it actively “challenges” the factual output to force ethical considerations and guide refinement. This iterative ethical dialogue is essential for preventing accidental ethical drift, mitigating inherent biases, and ensuring that the final judgment actively promotes Resonance with the client’s values. It brings the theoretical concept of “minds checking minds” into practical, operational reality.

- The Power of Verifiable Provenance for Trust: In a world where AI decisions are becoming increasingly opaque, the VPAR model brings unparalleled transparency. Our Ethical Source Trace and Contextual Provenance provide an immutable, auditable record of this entire multi-agent interaction. Every piece of factual data used, every policy consulted by an Envoy, every ethical principle invoked by the Guardian, and every step of the Challenge Loop is meticulously logged. This provides a complete, verifiable audit trail for how the parallel “minds” interacted to arrive at a judgment, directly addressing critical concerns about accountability in complex AI systems.
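One common way to make an audit trail like this tamper-evident is hash chaining, where each entry stores a hash of the entry before it. The Python sketch below assumes that technique. The article does not say how the Ethical Source Trace is stored, and `ProvenanceLog`, `append`, `verify`, and the event names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry body."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ProvenanceLog:
    """Append-only, hash-chained log: each entry commits to its predecessor."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, event: str, detail: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "event": event, "detail": detail, "prev": prev}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "event", "detail", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = ProvenanceLog()
    log.append("envoy:legal", "source_consulted", {"source_id": "REG-7"})
    log.append("guardian", "challenge_issued", {"principle": "transparency"})
    log.append("envoy:legal", "draft_revised", {"round": 2})
    print(log.verify())  # True
    log.entries[1]["detail"]["principle"] = "speed"  # simulate tampering
    print(log.verify())  # False
```

Hash chaining detects edits and reordering, but on its own it cannot detect entries cut off from the end of the log. Anchoring the latest hash somewhere outside the log, such as in a separate system, is a typical way to close that gap.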

Beyond Research: ETUNC’s Deployable Ethical Framework

While leading research labs are discussing the theoretical underpinnings and experimental implementations of multi-agent alignment, ETUNC.ai is actively deploying these principles today. We have transitioned from concept to reality, offering a comprehensive, deployable framework for ethical AI:

- The Constitutional Studio: This is where clients define their organizational “True North”—their core values, ethical principles, and desired decision-making patterns. It’s the foundational training ground that guides our parallel AI minds, providing them with the ethical compass for their distributed cognition.

- Certified Ethical Architects (CEAs): We understand that the human element remains paramount. Our CEAs are highly trained Human-in-the-Loop experts who oversee and calibrate this multi-agent alignment process. They adjudicate complex Challenge Loops, refine constitutional principles, and ensure that the symbiotic intelligence of Envoy and Guardian consistently delivers Judgment-Quality AI.

- The “ETUNC Inside Compass” Vision: This multi-agent architecture is robust enough for enterprise cloud deployments and efficient enough for the emerging era of ubiquitous, offline AI at the edge. Our vision for the “ETUNC Inside Compass,” which embeds a resident Resonator directly into robotics and local devices, leverages the specialized, lightweight nature of our agents and reflects our conviction that ethical alignment can and should happen directly where decisions are made.

Conclusion: Leading the Era of Judgment-Quality AI

The convergence of cutting-edge AI research with ETUNC’s foundational architectural principles is no coincidence. It underscores a fundamental truth: the future of AI isn’t solely about maximizing intelligence, but about achieving judgment-quality intelligence – AI that is not only powerful and efficient but also ethically aligned, transparently accountable, and deeply trustworthy.

ETUNC.ai stands at the forefront of this evolution. By proactively embracing the power of parallel thinking and multi-agent systems, we are delivering robust, deployable solutions that meet the ethical demands of today and anticipate the complexities of tomorrow. We are not just building AI; we are building the ethical operating system for the next generation of intelligent systems, ensuring a future where advanced AI truly serves humanity with wisdom and integrity.
